Tech Stack for Machine Learning

Introduction

Machine learning projects aren’t just about developing models. Equally important are the infrastructure and supporting tools that ensure repeatable experiments, well-managed data, and reliable reproduction of results. Based on our experience, we recommend TensorFlow as a framework, MLflow for experiment tracking, and proper data management with DVC (Data Version Control).

Machine Learning Frameworks

When building models, the chosen framework is often the starting point. Without a framework, every matrix multiplication, convolution, or backpropagation would have to be programmed manually. A framework not only saves time but also enables efficient work on GPUs and TPUs and later allows for the deployment of models in production.

For projects where both rapid prototyping and production deployment are important, TensorFlow is the best choice. It offers Keras for simple model development and TensorFlow Serving/Lite for deployment. Alternatives such as PyTorch and JAX are powerful for research but less suitable for production. Choose this only if flexibility in research is more important than stability.

Experiment Tracking

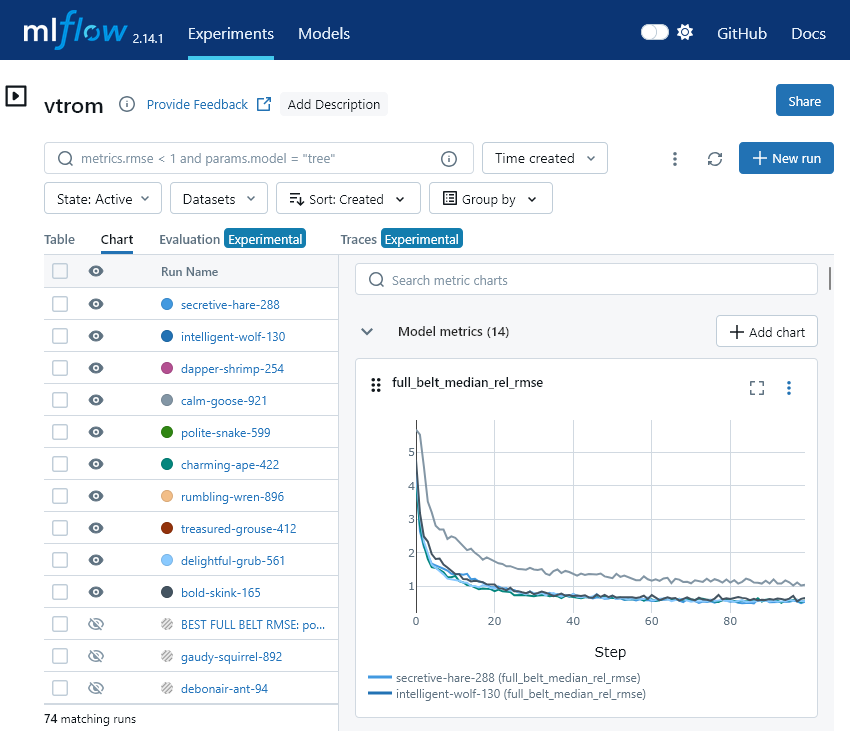

Training models sometimes involves dozens of runs with small variations in hyperparameters or architecture. Without a good system, confusion quickly arises: which settings yielded the best results, and why?

Experiment tracking software can be used to track this. We recommend MLflow for this because of its local and self-hosted capabilities. MLflow makes it easy to record and compare parameters, results, and model versions. This proves particularly valuable in situations where multiple team members work in parallel and want to share their results. Furthermore, the ability to centrally store and version models offers clear added value; it makes it easier to return to previous runs and redeploy models. While a tool like Weights & Biases offers powerful visualizations, it relies on SaaS and is less suitable for teams that prioritize privacy and control.

Our experience has taught us that experiment tracking isn’t optional but should be a core component of every ML process. It saves time, prevents confusion, and makes decisions more informed.

Data Management

Data is the foundation of every machine learning project. Without proper management, data can quickly become inconsistent, leading to unreliable models and results that are difficult to reproduce. Versioning datasets and making changes traceable make it possible to repeat experiments and validate models. Therefore, we recommend using DVC for version control of datasets and ML artifacts, and scalable storage and traceability tools such as Delta Lake or BigQuery.

Conclusion

For a robust ML workflow, we recommend:

- Framework: TensorFlow

- Experiment Tracking: MLflow

- Data Management: DVC/Delta Lake

These choices offer stability, reproducibility, and control over infrastructure, forming a solid foundation for future projects.

More information

See also our services for machine learning. Feel free to contact us if you want to know more. If you want to be kept up to date on the tools and techniques that we use, the our newsletter is a good option.